Data science: the four stages

Friday, 19 November 2021Nowadays, when even in clinical medicine, algorithmic decision support tools, computer-assisted navigation, and surgical robots are being used, some companies continue using traditional data collecting and analyzing methods instead of gaining benefits from Data Science. Meanwhile, in the 1980s, about 1% of humankind’s data was already available in digital form.

Everything began in the summer of 1956 at Dartmouth College. Dr. John McCarthy and his contemporaries began researching artificial intelligence (AI). They thought that every aspect of learning could be defined so accurately that they could create a machine to simulate it.

Digital information technology currently accounts for 99% of data, which is predicted to be 5 zettabytes.

Modern society has access to enormous data and needs guidance to extract meaningful information for practical use. Luckily that is possible due to Data Science, Artificial Intelligence (AI), and its subsets.

Data science: the Four stages

- Math and statistics

- specialized programming

- advanced analytics

- AI

- storytelling

Data science includes below-mentioned four stages:

- Collection

- Cleaning

- Preprocessing

- Modeling/analytics

To uncover all the mysteries behind Data science for people outside the field, research writers from WriteMyPapers will explain all the stages of Data science.

1. Data collection

The first stage of Data Science is Data collection. The first stage of Data Science is Data collection. What should you do when you don’t have data you need to work with? You have to either find it online or scrape it manually or extract data from websites.

After collecting the data, you have to save it in a suitable format (SQL database, CSV, Excel, etc.) for further use.

You can find information from various sources:

- Documents & Records

- Websites

- Published Literature

If there isn’t any relevant information, you’ll have to do some observation:

- Human observations

- Experiments

- Surveys

- Sensors/monitors

- Interviews

Data scraping or web scraping is the most effective way of getting data from the web. It is the process of importing information from a website into a spreadsheet or a file on the computer. Data scraping helps to gather different data in one place. It is handy in any case where you deal with information.

Here are some of the most common uses for data scraping:

- Web content or business intelligence research

- Travel booker sites pricing or price comparison

- Crawl public data sources to get sales leads or conduct market research

- Send data from one e-commerce site to another.

What the web scraping process looks like in a few words:

- Find a website containing relevant data and collect the URLs

- Code script to manipulate website HTML and extract (scrape) necessary data fields.

- Store the data in a suitable format (CSV, XLSX, SQL, etc.)

Data scraping is not as simple as you may think when you have a big project. But you can rely on us, as our professional team can gather data for your project, collect and structure it, and deliver it in the format you need.

2. Data cleaning

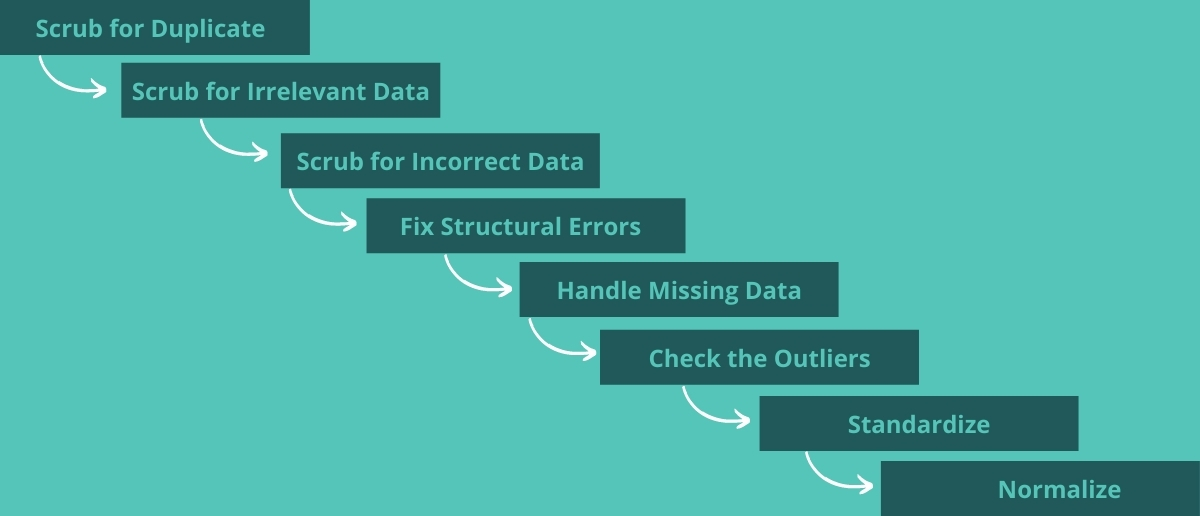

The second stage of Data Science is Data cleaning, which follows the data collection process. It is the process of detecting incorrect, incomplete, or missing data and modifying, replacing, or deleting it.

If there are missing data fields, they need to be handled. There are 3 ways to handle them:

- delete the rows containing missing/corrupt fields.

- If the missing data is numerical, add the mean/median of the given column instead of the missing values.

Deleting the row is not always a good idea because some vital information will be deleted too. It’s preferable to fix them.

Now let’s see the steps you should take to clean the collected data:

- Remove unnecessary columns

- Fix structural errors

- Fix/remove corrupt values

- Validate and QA

False data will lead to incorrect conclusions and will affect your business. So the quality of data is another significant point you should pay attention to.

3. Data preprocessing

When data is collected and transformed into usable information, Data processing is the next process of Data Science. This process comes before feeding the data to machine learning models. Data processing is helpful because it helps machine learning models have better accuracies.

Here are the stages of data preprocessing:

- Outlier Removal

- Missing Value Imputation

- Normalization

- Subsetting

Outlier Removal

Outliners are extremities in the data. In order to detect outliers for numerical data, you should find data points that are numerically far from the mean of the given column.

To detect and remove the outliers use methods such as the interquartile range method

Optionally, you can replace outliers with the mean/median of the given column.

Missing Value Imputation

You can either impute/fill missing values or delete them. If there are many missing values, it is not recommended to delete them. Missing value imputation can also be done in the data cleaning stage.

To fill missing values, it is recommended to put mean/median in the column. Median or mean must be based on data distribution.

Fill missing values using ML model (Machine Learning) to predict given columns using other columns.

Normalization

Data Normalization means transforming all the data into the same scale.

Different columns can have numerical values with very different scales, which is why normalization is essential. You’ll have to scale all numerical columns to a 0-1 range.

Subsetting

Subsetting includes the process of splitting the data into training and testing sets. These sets allow us to test the accuracy of ML models on new data (test set) after training and checking the accuracy on the training set.

4. Data modeling/analytics

- the process of visualizing the data after it went through the processes mentioned above

- the process of feeding the data into machine learning models.

- both of them

Stop wasting your time and resources on inefficient processes, because they can easily be managed. With artificial intelligence and machine learning, we transform scattered, random data into valuable insights. Business processes can be assisted to handle daily activities logically, and services can be implemented across existing technologies with zero compatibility errors.

We adapt to the future technological trends of artificial intelligence development. Moreover, our Artificial Intelligence development team will help you identify the areas that can benefit most from artificial intelligence solutions and implement them to ensure that you achieve the desired returns.

If you are interested or have any questions feel free to contact us